Social media moderation tools are essential for managing online communities. They help keep platforms safe and engaging.

In today’s digital age, social media is a vital part of our lives. Businesses and individuals use it to connect, share, and grow. But with this come challenges. Negative comments, spam, and inappropriate content can harm online communities. This is where social media moderation tools come in.

These tools help monitor and manage content, ensuring a positive experience for users. They filter out unwanted posts and maintain a healthy environment. By using these tools, you can protect your brand’s reputation and keep your community safe. Let’s explore why these tools are crucial and how they can benefit you.

Introduction To Social Media Moderation

Social media platforms have become essential in our daily lives. Billions of users share content every day. This creates a massive flow of information. Managing this information is crucial. Social media moderation helps keep the platforms safe and user-friendly.

Importance Of Moderation

Moderation ensures that harmful content does not spread. It protects users from offensive materials. This keeps the environment positive and safe. Good moderation builds trust. Users feel comfortable sharing their thoughts. Businesses also rely on this trust. They engage with their audience knowing the platform is secure.

Moderation also helps in maintaining brand reputation. Negative or harmful content can damage a brand’s image. Active moderation prevents this risk. It also ensures compliance with legal regulations. Different countries have different content laws. Moderation helps follow these laws.

Challenges In Moderation

Moderation is not always easy. The sheer volume of content is overwhelming. Large platforms receive a constant stream of new posts every second. This makes it hard to review everything.

There is also the issue of context. Some content is not clearly harmful. It may depend on cultural or social views. This makes moderation subjective and complex.

User privacy is another concern. Moderators need access to content. This can raise privacy issues. Balancing moderation and privacy is challenging.

False positives are also a problem. Sometimes, innocent content gets flagged. This can frustrate users. It can also lead to unnecessary restrictions.

Finally, there is the risk of bias. Moderators are human. They may have personal biases. This can affect their judgment. Automated tools help, but they are not perfect. They can inherit bias from the data they are trained on.


Types Of Moderation Tools

Social media moderation tools help manage and maintain online communities. These tools ensure a safe and friendly environment. There are different types of moderation tools available. They fall into two main categories: automated tools and manual tools.

Automated Tools

Automated tools use algorithms and AI to filter content. They can detect spam, offensive language, and inappropriate images. These tools work around the clock. They review and flag content in real time. Automated tools are fast and efficient. They save time and reduce the workload of moderators.

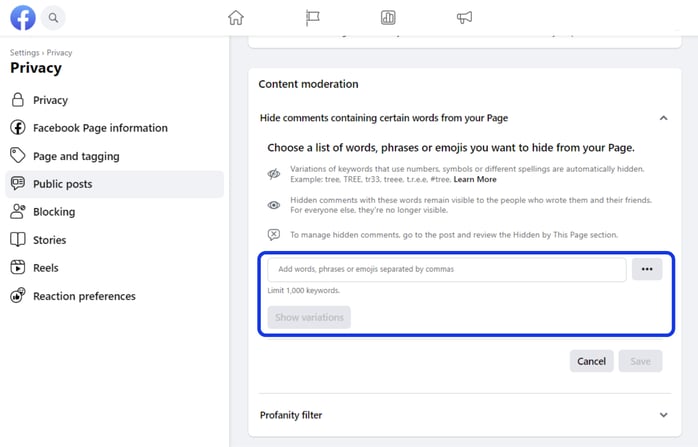

Examples of automated tools include keyword filters and image recognition software. Keyword filters block posts with banned words or phrases. Image recognition software scans for harmful images. These tools can also identify fake accounts. Automated tools are essential for large social media platforms.
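A keyword filter of the kind described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the banned phrases and the function names (`build_pattern`, `find_banned`, `is_blocked`) are made up for the example. It matches whole words only, so "scam" does not trigger on "scampi".

```python
import re

# Hypothetical banned list; a real community would maintain its own.
BANNED_PHRASES = ["buy followers", "free crypto", "scam"]


def build_pattern(phrases):
    """Compile one case-insensitive regex matching any phrase on word boundaries."""
    escaped = (re.escape(p) for p in phrases)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


PATTERN = build_pattern(BANNED_PHRASES)


def find_banned(text, pattern=PATTERN):
    """Return the banned phrases found in text, lowercased, in order of appearance."""
    return [m.group(0).lower() for m in pattern.finditer(text)]


def is_blocked(text):
    """True if the text contains at least one banned phrase."""
    return bool(find_banned(text))


if __name__ == "__main__":
    for post in ["Get FREE crypto now!", "My scampi recipe", "Nice photo"]:
        print(post, "->", "blocked" if is_blocked(post) else "allowed")
```

Word-boundary matching reduces accidental hits on innocent words, but a plain keyword list still misses misspellings and context, which is why it is usually combined with human review.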

Manual Tools

Manual tools involve human moderators reviewing content. They bring human judgment and an understanding of context. Human moderators handle complex situations better. They can make nuanced decisions. Manual tools include reporting systems and review panels.

Users can report inappropriate content through reporting systems. Human moderators then review these reports. Review panels consist of experienced moderators. They discuss and decide on flagged content. Manual tools ensure accurate and fair moderation. They add a human perspective to the process.
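The report-then-review flow can be sketched as a small queue. The example below is an assumption-laden illustration: the class name `ReportQueue`, the threshold of three distinct reporters, and the first-in, first-out review order are all choices made for the sketch, not features of a specific tool.

```python
from collections import defaultdict, deque

REVIEW_THRESHOLD = 3  # assumed: distinct reporters needed before escalation


class ReportQueue:
    """Collect user reports and escalate a post once enough distinct users flag it."""

    def __init__(self, threshold=REVIEW_THRESHOLD):
        self.threshold = threshold
        self._reporters = defaultdict(set)  # post_id -> set of reporting user ids
        self._pending = deque()             # posts waiting for a human moderator

    def report(self, post_id, user_id):
        """Record a report. Return True only when this report escalates the post."""
        reporters = self._reporters[post_id]
        before = len(reporters)
        reporters.add(user_id)  # a set ignores repeat reports from the same user
        if before < self.threshold <= len(reporters):
            self._pending.append(post_id)
            return True
        return False

    def next_for_review(self):
        """Return the oldest escalated post, or None if nothing is waiting."""
        return self._pending.popleft() if self._pending else None


if __name__ == "__main__":
    queue = ReportQueue(threshold=2)
    queue.report("post-1", "alice")
    queue.report("post-1", "bob")
    print(queue.next_for_review())
```

Counting distinct reporters rather than raw reports keeps one user from escalating a post alone by reporting it repeatedly.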

Key Features Of Moderation Tools

Most moderation tools share a few core features. They filter harmful content to maintain a positive environment. They let users report issues. They also use AI to make moderation more efficient.

Content Filtering

Content filtering is a crucial feature of moderation tools. It helps block inappropriate posts. This includes spam, offensive language, and illegal content. Filters can be customized to meet specific community needs. This ensures a safer online space for users.

User Reporting

User reporting empowers the community to flag problematic content. It allows users to report abusive behavior or spam. Reports are then reviewed by moderators. This collaborative approach enhances the overall safety of the platform.

AI And Machine Learning

AI and machine learning improve the moderation process. They analyze patterns and predict potential issues. These technologies can detect harmful content faster than humans. They also learn and adapt over time. This makes moderation more effective and efficient.
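To make "learning from patterns" concrete, here is a toy text classifier written from scratch. It is a sketch of one classic technique (multinomial naive Bayes with add-one smoothing), not the method used by any tool mentioned in this article. The class name `TinyNaiveBayes`, the labels, and the training sentences are invented for illustration, and production systems use far larger models and datasets.

```python
import math
from collections import Counter


class TinyNaiveBayes:
    """Multinomial naive Bayes over lowercase whitespace tokens, add-one smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of token frequencies
        self.doc_counts = Counter()  # label -> number of training examples
        self.vocab = set()           # every token seen during training

    def learn(self, text, label):
        """Update the counts with one labeled example."""
        tokens = text.lower().split()
        self.word_counts.setdefault(label, Counter()).update(tokens)
        self.doc_counts[label] += 1
        self.vocab.update(tokens)

    def predict(self, text):
        """Return the most likely label, or None if nothing has been learned."""
        tokens = text.lower().split()
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # log prior + sum of smoothed log likelihoods
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for tok in tokens:
                score += math.log((counts[tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    model = TinyNaiveBayes()
    model.learn("win free money now", "spam")
    model.learn("free prize click here", "spam")
    model.learn("great photo from the trip", "ok")
    model.learn("thanks for sharing this photo", "ok")
    print(model.predict("free money prize"))
    print(model.predict("nice photo thanks"))
```

Because `learn` can be called at any time, moderator decisions can be fed back in as new labeled examples, which is one simple way a tool can adapt over time.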


Popular Moderation Tools

Social media moderation tools help manage online communities. They ensure content follows guidelines. Below are some popular moderation tools that can assist in maintaining a safe and engaging online environment.

Tool A Overview

Tool A is known for its user-friendly interface. It offers various features to monitor and control content. Key features include:

- Automatic Filtering: Blocks inappropriate content.

- Real-Time Alerts: Notifies moderators about suspicious activity.

- Customizable Settings: Tailors moderation to your community needs.

Tool A helps in keeping your online space secure and welcoming.

Tool B Overview

Tool B focuses on AI-driven moderation. It uses machine learning to identify harmful content. Features include:

- AI Detection: Recognizes offensive language and images.

- Community Feedback: Allows users to report issues.

- Analytics: Provides insights on community behavior.

Tool B is ideal for larger communities that need robust moderation.

Tool C Overview

Tool C offers comprehensive moderation with a focus on user interaction. It includes:

- Interactive Dashboard: Easy access to moderation tools.

- User Engagement: Encourages positive interactions.

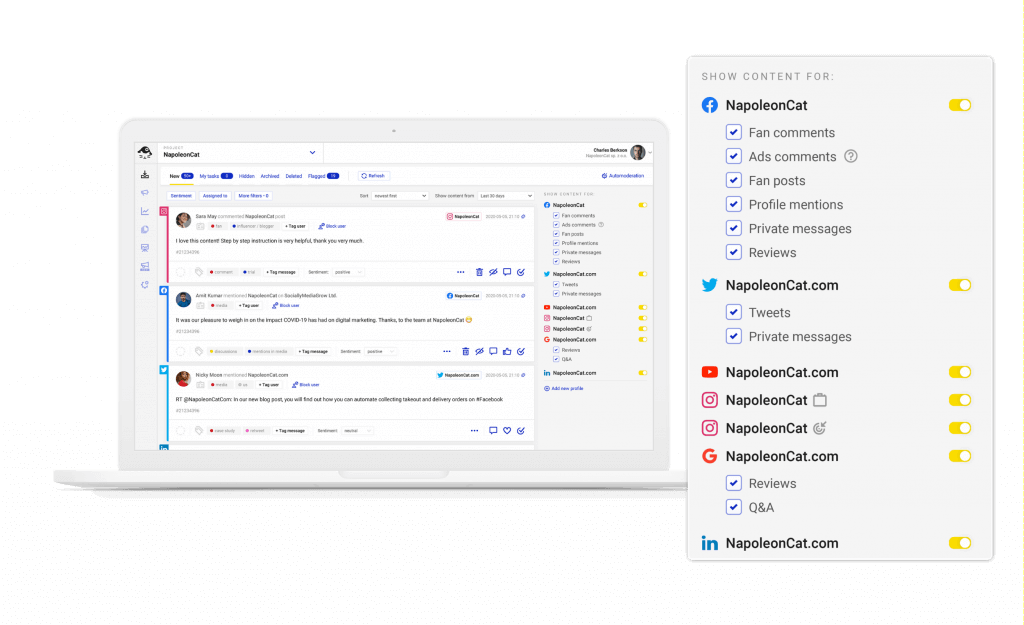

- Integration: Works with various social media platforms.

Tool C is perfect for communities aiming to foster positive engagement.

Implementing Moderation Tools

Implementing moderation tools is crucial for maintaining a safe online community. These tools help to filter out unwanted content and ensure a positive user experience. There are several steps involved in implementing these tools effectively.

Choosing The Right Tool

Choosing the right moderation tool is the first step. Start by identifying your specific needs. Some tools focus on filtering offensive language. Others may help in blocking spam or unwanted advertisements. Understand your platform’s requirements before making a choice. Research different tools and read reviews. Compare features and pricing. Select a tool that fits your budget and meets your moderation needs.
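One simple way to compare features and pricing is a weighted score. The sketch below assumes you rate each candidate from 1 to 5 on a few criteria. The criteria, weights, and ratings are placeholders to replace with your own, and the tool names are only labels.

```python
# Assumed criteria and weights (they sum to 1.0); adjust to your priorities.
WEIGHTS = {"filtering": 0.4, "integrations": 0.3, "price": 0.3}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings into one number using the given weights."""
    return sum(weights[name] * ratings[name] for name in weights)


def rank_tools(candidates, weights=WEIGHTS):
    """Return tool names ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


if __name__ == "__main__":
    # Placeholder ratings, not real product evaluations.
    candidates = {
        "Tool A": {"filtering": 3, "integrations": 5, "price": 4},
        "Tool B": {"filtering": 5, "integrations": 3, "price": 2},
    }
    for name in rank_tools(candidates):
        print(name, round(weighted_score(candidates[name]), 2))
```

The ranking is only as good as the ratings behind it, so it works best as a way to structure the comparison rather than as a final verdict.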

Integration With Platforms

Integration with platforms is the next critical step. Ensure the moderation tool works seamlessly with your social media platforms. Check compatibility with major platforms like Facebook, X (formerly Twitter), and Instagram. Look for tools that offer easy setup and minimal downtime. Test the integration thoroughly. Make sure all features work as expected. Train your team on how to use the new tool. This ensures a smooth transition and effective moderation.

Effectiveness Of Moderation Tools

Social media moderation tools play a crucial role in maintaining online communities. They help filter inappropriate content, manage user interactions, and ensure a safe environment. By using these tools, brands can protect their reputation and provide a better user experience.

Success Stories

Many companies have seen great results with moderation tools. For instance, a leading e-commerce platform reduced offensive comments by 80% within six months. This improvement led to a more positive community and increased user engagement.

Another example is an online forum that used automated moderation to handle spam. The forum’s user satisfaction scores increased by 30% after implementing the tool. These success stories highlight the potential benefits of moderation tools.

Limitations And Drawbacks

While moderation tools offer many benefits, they are not perfect. One common issue is the risk of false positives. Sometimes, legitimate content gets flagged as inappropriate. This can frustrate users and lead to negative feedback.
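False positives can be measured if moderators record the final verdict on a sample of content. The sketch below assumes each record is a pair of booleans: whether the tool flagged the item and whether a human judged it a real violation. The function names and sample data are illustrative.

```python
def false_positive_rate(decisions):
    """Share of legitimate items that the tool flagged anyway.

    decisions: iterable of (flagged_by_tool, actually_violating) booleans.
    """
    decisions = list(decisions)
    false_positives = sum(1 for flagged, bad in decisions if flagged and not bad)
    legitimate = sum(1 for _, bad in decisions if not bad)
    return false_positives / legitimate if legitimate else 0.0


def precision(decisions):
    """Share of flagged items that were real violations."""
    decisions = list(decisions)
    flagged = sum(1 for was_flagged, _ in decisions if was_flagged)
    true_positives = sum(1 for was_flagged, bad in decisions if was_flagged and bad)
    return true_positives / flagged if flagged else 0.0


if __name__ == "__main__":
    # Made-up sample: (flagged by tool, confirmed violation by a moderator)
    sample = [(True, True), (True, False), (False, False), (False, False), (False, True)]
    print("False positive rate:", false_positive_rate(sample))
    print("Precision:", precision(sample))
```

Tracking these two numbers over time shows whether a change to filter settings is frustrating more legitimate users or fewer.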

Another limitation is the need for human oversight. Automated tools can miss context and nuance. This is why a balance between automated systems and human moderators is essential. Relying solely on tools can lead to errors and dissatisfaction.

| Factor | Details |
|---|---|
| False Positives | Legitimate content may be incorrectly flagged. |
| Human Oversight | Tools need human review for context. |

Understanding these limitations helps in better implementation and usage of moderation tools. The key is to use them as part of a broader strategy. Combining technology with human judgment can create a more effective moderation process.
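One common way to combine technology with human judgment is to let the automated score decide only the clear cases and send everything uncertain to a person. The sketch below assumes an automated tool that returns a score between 0 and 1, where higher means more likely harmful. The thresholds and names are illustrative and would need tuning for a real community.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


# Assumed thresholds; not taken from any specific product.
REMOVE_AT = 0.95
REVIEW_AT = 0.60


def route(score, remove_at=REMOVE_AT, review_at=REVIEW_AT):
    """Map an automated harm score in [0, 1] to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        return Action.REMOVE        # very confident: act automatically
    if score >= review_at:
        return Action.HUMAN_REVIEW  # uncertain: a moderator decides
    return Action.ALLOW             # low risk: publish


if __name__ == "__main__":
    for s in (0.10, 0.75, 0.99):
        print(s, "->", route(s).value)
```

Raising `REMOVE_AT` lowers the risk of false positives at the cost of more work for human moderators, so the thresholds are a direct expression of the balance described above.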

Future Of Moderation Tools

The future of moderation tools looks promising with the rise of advanced technology. Social media platforms need to ensure safe and respectful interactions. As the internet evolves, so must the tools used to maintain order and civility in online spaces. Let’s explore the key areas shaping the future of these tools.

Technological Advancements

Technological advancements are driving the evolution of moderation tools. Artificial intelligence (AI) and machine learning (ML) play a big role. These technologies help in detecting and managing inappropriate content faster than ever before.

For instance, AI algorithms can scan text, images, and videos. They identify harmful content quickly. This reduces the need for manual review. Here are some key advancements:

- Natural Language Processing (NLP): Understanding the context of conversations.

- Image Recognition: Detecting offensive images.

- Real-time Monitoring: Immediate action on inappropriate content.

These advancements help in maintaining a safe online environment. They also allow platforms to scale their moderation efforts efficiently.

Evolving Community Standards

Community standards are always changing. What was acceptable a few years ago might not be today. Thus, moderation tools must adapt to these evolving norms.

Platforms regularly update their guidelines to reflect current values. This means moderation tools need to be flexible. They should accommodate these changes quickly. Here are some factors influencing community standards:

- Cultural Sensitivity: Respecting diverse backgrounds and perspectives.

- Legal Regulations: Complying with laws in different regions.

- User Feedback: Listening to the community’s voice.

Maintaining a balance between freedom of expression and safety is crucial. Moderation tools play a key role in achieving this balance.
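Keeping rules in versioned configuration, rather than hard-coding them, is one way to make a tool flexible enough for changing standards and regional laws. The sketch below is hypothetical throughout: the region code, version labels, and content categories are invented, and real legal requirements must come from qualified advice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    version: str                   # label for the guideline revision in force
    blocked_categories: frozenset  # content categories that are not allowed


# Hypothetical default policy and one regional override.
DEFAULT_POLICY = Policy("2024-01", frozenset({"spam", "harassment"}))
REGION_OVERRIDES = {
    "region_a": Policy("2024-03", frozenset({"spam", "harassment", "gambling_ads"})),
}


def policy_for(region):
    """Return the regional policy if one exists, otherwise the default."""
    return REGION_OVERRIDES.get(region, DEFAULT_POLICY)


def is_allowed(category, region):
    """True if content in this category may be shown in the given region."""
    return category not in policy_for(region).blocked_categories


if __name__ == "__main__":
    print(is_allowed("gambling_ads", "region_a"))
    print(is_allowed("gambling_ads", "region_b"))
    print(policy_for("region_a").version)
```

Updating a guideline then means publishing a new `Policy` with a new version label, which also leaves a record of which rules applied when a decision was made.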

Best Practices For Community Safety

Ensuring community safety on social media is crucial. Moderation tools play a key role. They help maintain a positive environment. Following best practices can enhance their effectiveness. This keeps online spaces safe and welcoming.

Regular Updates And Monitoring

Keep your moderation tools up to date. New threats emerge daily. Regular updates protect against these. Consistent monitoring is also essential. Stay vigilant for any harmful content. This helps prevent issues before they escalate.

Engaging The Community

Encourage community members to report inappropriate behavior. They are your first line of defense. Foster a culture of respect and responsibility. This makes users feel valued and safe. Respond to their concerns promptly. Show that you take their safety seriously.

Promote positive interactions. Highlight good behavior and contributions. This sets a standard for others to follow. Positive reinforcement can shape community norms. It makes the online space more enjoyable for everyone.


Frequently Asked Questions

What Are Social Media Moderation Tools?

Social media moderation tools help manage and filter user-generated content. They ensure community guidelines are followed and maintain a positive online environment.

Why Use Social Media Moderation Tools?

Using moderation tools helps protect your brand’s reputation. They block spam and offensive content, and they help ensure a positive user experience.

Which Features Do Moderation Tools Offer?

Moderation tools offer features like automatic filtering, keyword monitoring, and user activity tracking. They also provide reporting and analytics.

How Do Moderation Tools Improve Engagement?

Moderation tools improve engagement by creating a safe and welcoming space. This encourages user interaction and builds trust in your platform.

Conclusion

Social media moderation tools are essential in today’s digital world. They help maintain a positive online environment. These tools can filter out harmful content. They also save time and effort for moderators. Choosing the right tool can make a big difference.

Keep your community safe and engaging. Consider your needs and budget. Invest in a tool that fits best. Stay proactive in managing your social media platforms.